November 11, 2024

Choosing the Right AI Accelerator | NPU or TPU for Edge and Cloud Applications

Artificial intelligence (AI) is becoming a powerful engine in the world of technology. Allowing computers to complete tasks and make decisions can lead to a safer and more efficient world. Specialized processors like NPUs and TPUs are increasingly used to accelerate the computations that AI depends on. The central processing unit (CPU) has been the mainstay powering computers for years. It can run these computations, but it is not as fast or as efficient for AI operations as an optimized chip like an NPU or TPU. As demand for AI computation grows, specialized, dedicated processors are coming into play.

What is a Neural Processing Unit (NPU)?

A Neural Processing Unit (NPU) is a specialized hardware accelerator that optimizes neural network computations for AI and machine learning (ML). NPUs accelerate tasks such as image recognition, speech processing, and data analysis by running artificial neural networks, which are loosely modeled on the human brain. These networks consist of layers of interconnected nodes that process and transmit information. Because their circuitry is dedicated to the operations these networks rely on, NPUs handle these tasks much more efficiently than general-purpose processors like CPUs.

They excel at running inferences on pre-trained models but are less effective for training models or handling complex data preprocessing tasks, which often require flexibility and substantial computational power. NPUs are commonly used in embedded devices. They integrate directly into system-on-chip (SoC) designs or as expansion cards in form factors like Mini-PCIe or M.2.

Using an NPU

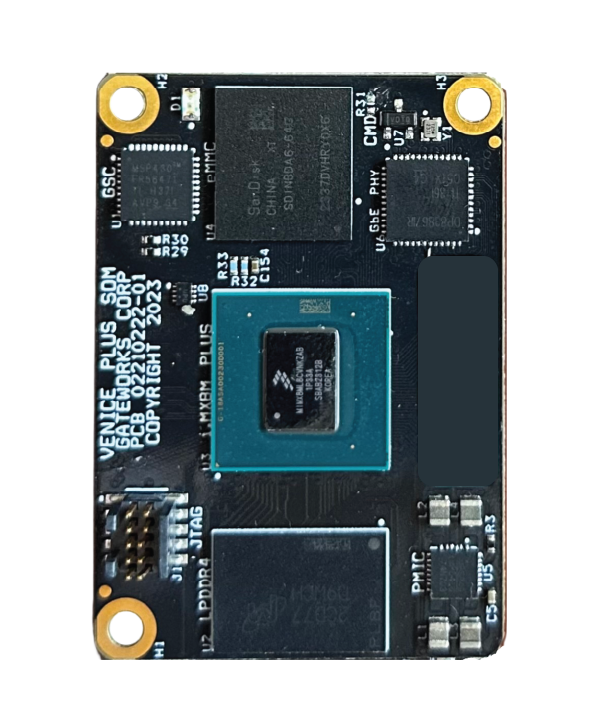

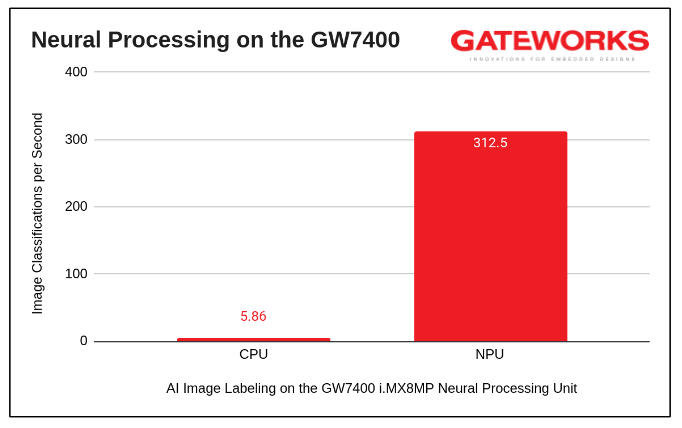

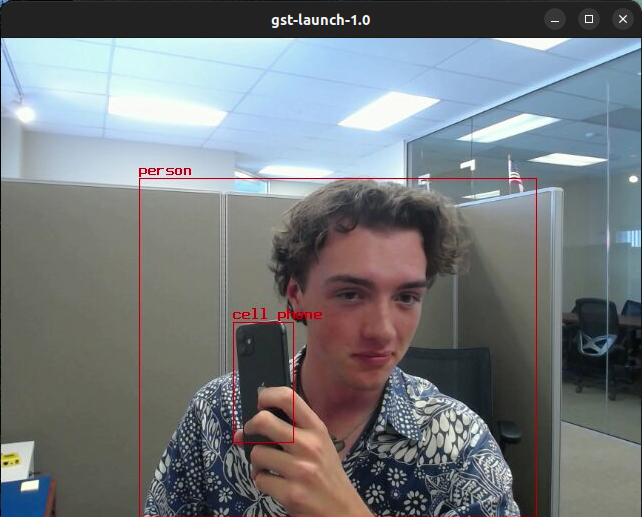

For example, the NXP i.MX 8M Plus processor used on the Gateworks family of Venice single board computers (SBCs) includes a built-in NPU. This NPU can run calculations over 53 times faster than the main CPU. Specific software and drivers tap into the NPU, allowing Python scripts and GStreamer pipelines to use it.

Gateworks Venice SBCs equipped with the i.MX 8M Plus processor can run AI applications such as real-time object detection in video feeds or voice command processing while maintaining a low power footprint. Find detailed examples on the Gateworks NPU wiki page.

For solutions not utilizing the i.MX 8M Plus processor, an external NPU Mini-PCIe card, such as a Hailo AI card, can be used with Gateworks SBCs. Rated at 26 TOPS (trillions of operations per second), this powerful NPU card is another way to quickly process AI computations. Detailed examples are provided on the Gateworks Hailo AI Wiki page.

What is a Tensor Processing Unit (TPU)?

A Tensor Processing Unit (TPU) is a hardware accelerator developed by Google to accelerate machine learning workloads, specifically those using TensorFlow. TensorFlow is an open-source machine learning framework that enables building, training, and deploying AI models across platforms. Rather than prioritizing the high floating-point precision of a graphics processing unit (GPU), the TPU uses lower-precision 8-bit integers to achieve faster computation times. TPUs also leverage systolic arrays, which provide high-performance matrix multiplication. This makes the TPU well suited to deep learning model training and inference.
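To make the 8-bit integer idea concrete, here is a minimal sketch in plain Python. It assumes a simple symmetric quantization scheme chosen purely for illustration; the function names (`quantize`, `int8_dot`) are hypothetical, and real TPU toolchains may quantize differently. The point is that the multiply-accumulate work happens on small integers, with a single floating-point rescale at the end.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers using one shared scale (symmetric scheme).

    Illustrative assumption: the largest magnitude in `values` maps to the
    largest representable integer (127 for 8 bits).
    """
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def int8_dot(a, b):
    """Approximate the dot product of two float vectors with integer math."""
    qa, scale_a = quantize(a)
    qb, scale_b = quantize(b)
    # The accumulation runs entirely on integers, which is the cheap part
    # in hardware; only the final rescale uses floating point.
    accumulator = sum(x * y for x, y in zip(qa, qb))
    return accumulator * scale_a * scale_b


if __name__ == "__main__":
    a = [0.5, -1.0, 0.25]
    b = [1.0, 0.5, -0.75]
    exact = sum(x * y for x, y in zip(a, b))
    print("float result:", exact)
    print("int8 result: ", int8_dot(a, b))
```

The quantized result differs slightly from the floating-point one. That small loss of precision is the trade-off made in exchange for faster, lower-power arithmetic.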

For cloud-based AI applications, TPUs provide the massive computational power needed to process large datasets and train complex models. Google Cloud offers TPUs that scale to handle large-scale AI workloads. For edge AI, the Google Coral TPU, with 4 TOPS, is optimized for low-power, high-efficiency tasks like image classification and real-time video analysis in devices like smart cameras, drones, and robotics.

Using a TPU

The Google Coral TPU is an edge TPU that features 4 TOPS and plugs into a Gateworks SBC. You can use both Python and GStreamer to leverage the TPU for running image, video, and data inferences. Find detailed examples on the Gateworks TPU Wiki page.
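As a rough illustration of the Python route, the sketch below loads the Edge TPU delegate through the TensorFlow Lite runtime and runs one classification. It is a sketch under assumptions, not the method from the Gateworks wiki: it assumes the `tflite_runtime` package and the Edge TPU runtime library (`libedgetpu.so.1`) are installed, that the model was compiled for the Edge TPU, and that the model outputs a single row of class scores. The helper names `top_k` and `classify` and the model path are hypothetical.

```python
def top_k(scores, k=3):
    """Return (index, score) pairs for the k highest scores, best first."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def classify(model_path, input_tensor, delegate_lib="libedgetpu.so.1", k=3):
    """Run one inference through a TensorFlow Lite delegate.

    model_path   -- hypothetical path to a model compiled for the Edge TPU
    input_tensor -- NumPy array matching the model's input shape and dtype
    delegate_lib -- shared library that hands the work to the accelerator
    """
    # Imported lazily so this file still loads on machines without the runtime.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    interpreter = Interpreter(
        model_path=model_path,
        experimental_delegates=[load_delegate(delegate_lib)],
    )
    interpreter.allocate_tensors()
    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]

    interpreter.set_tensor(input_info["index"], input_tensor)
    interpreter.invoke()

    # Assumption: output shape is [1, num_classes], so take the first row.
    scores = interpreter.get_tensor(output_info["index"])[0]
    return top_k(list(scores), k)
```

Keeping the delegate library as a parameter is a deliberate choice: the accelerator-specific part is isolated in one argument, while the rest of the script stays ordinary TensorFlow Lite code.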

For cloud computing, see Google Cloud TPUs.

NPU or TPU: Which One Should You Choose?

| Criteria | NPU | TPU |
|---|---|---|
| Best For | Real-time AI inference on edge devices | Deep learning model training and inference in the cloud |
| Power Efficiency | Excellent for low-power, battery-powered devices | Moderate power consumption (edge TPUs are efficient, but cloud TPUs are high-power) |
| Use Case | Image classification, speech recognition, anomaly detection | Large-scale model training, video/image processing |
| Performance | Excellent for real-time, low-latency applications | High throughput for deep learning tasks and large-scale inference |
| Software Ecosystem | Supports a wide range of AI frameworks (TensorFlow, PyTorch, etc.) | Primarily optimized for TensorFlow |
| Integration in Products | Often embedded in SoCs (ex. NXP i.MX 8M Plus) | Available as standalone cards (ex. Google Coral TPU), or cloud-based platforms (ex. Google Cloud TPUs) |

Conclusion & Recommendations

Ultimately, both NPUs and TPUs offer specialized benefits for accelerating AI tasks. For embedded systems, such as IoT devices or industrial robots, energy efficiency and real-time processing are crucial. NPUs are often the better choice. They provide high-performance inference with low power consumption, making them ideal for edge devices. These systems need to run AI tasks locally with minimal latency. NPUs integrate well into system-on-chip (SoC) designs, making them suitable for devices with limited space and power.

In contrast, the TPU shines in cloud computing for training deep learning models and handling large datasets. For cloud-based applications, large-scale data processing and model training are common. TPUs excel in these environments. They handle massive datasets and complex models efficiently. Their architecture allows for high throughput and parallel processing. Cloud platforms can support the higher power and resource needs of TPUs.

Both chips can accelerate AI operations at the edge, and both can run models built with the TensorFlow software library. While a few embedded TPUs have made their debut, the NPU sees much broader use in embedded environments. NPU is also the more generic and widely used term for a hardware AI processing chip. You will encounter it more frequently, such as inside the NXP i.MX 8M Plus processor used on Gateworks SBCs.

If you’re working with edge devices, consider an NPU for its energy efficiency and ability to handle AI inference tasks. For cloud applications or large-scale deep learning, a TPU may be the better choice. Regardless of the chosen chip, analyze the manufacturer’s software libraries and examples to ensure the capabilities meet your project requirements. If the TOPS rating of an internal NPU is not sufficient for heavy processing, consider an external NPU offered on a Mini-PCIe or M.2 card.
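One rough way to judge whether a TOPS rating is sufficient is a back-of-the-envelope estimate like the sketch below. The 4 TOPS and 26 TOPS figures come from the accelerators mentioned above; everything else is a labeled assumption: the workload of 50 billion operations per frame, the 30 frames-per-second target, and the 25% utilization factor are hypothetical placeholders, and the function names are illustrative. Real throughput depends heavily on the model, memory bandwidth, and software stack, so treat the result as an upper-bound sanity check rather than a benchmark.

```python
def max_inferences_per_second(tops, ops_per_inference, utilization=0.25):
    """Estimate a throughput ceiling from a TOPS rating.

    tops              -- rated trillions of operations per second
    ops_per_inference -- operations the model needs for one inference
    utilization       -- assumed fraction of the rating achieved in practice
    """
    if ops_per_inference <= 0:
        raise ValueError("ops_per_inference must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return tops * 1e12 * utilization / ops_per_inference


def meets_target(tops, ops_per_inference, target_fps, utilization=0.25):
    """True if the estimated ceiling reaches the required frame rate."""
    estimate = max_inferences_per_second(tops, ops_per_inference, utilization)
    return estimate >= target_fps


if __name__ == "__main__":
    workload = 50e9   # hypothetical: operations per video frame
    target = 30       # hypothetical: required frames per second
    for name, tops in [("4 TOPS accelerator", 4), ("26 TOPS accelerator", 26)]:
        fps = max_inferences_per_second(tops, workload)
        verdict = "ok" if meets_target(tops, workload, target) else "too slow"
        print(f"{name}: about {fps:.0f} inferences/s ({verdict})")
```

Under these assumed numbers, the 4 TOPS part tops out at about 20 inferences per second and misses the 30 fps target, while the 26 TOPS part reaches about 130. That is the kind of gap that would justify moving to an external card.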

Resources and Further Reading

- Gateworks NPU Wiki – Learn how to leverage the NPU on Gateworks SBCs.

- Gateworks TPU Wiki – Find detailed instructions for using TPUs with Gateworks SBCs.

- Gateworks Hailo AI Wiki – Explore the Hailo AI NPU integration with Gateworks systems.

- Gateworks NPU Primer – A deep dive into using NPUs for machine learning with Gateworks SBCs.

- TensorFlow – Learn more about TensorFlow on NPUs and TPUs, particularly for model training and inference.

Contact Gateworks to discuss how an SBC can be utilized for your next AI project.

- Venice GW7100 – 1x Ethernet, 1x Mini-PCIe, 1x USB

- Venice GW7200 – 2x Ethernet, 2x Mini-PCIe, 1x USB

- VeniceFLEX GW8200 – 2x Ethernet, 2x Flexible Sockets, 1x USB

- Venice GW7300 – 2x Ethernet, 3x Mini-PCIe, 2x USB

- Venice GW7400 – 6x Ethernet, 3x Mini-PCIe, 1x USB, 1x M.2

- View all Gateworks SBCs here.